AI Is Finally in Production, So Why Aren’t People Using It?

Every week, we interview practitioners and distill industry podcasts and conferences into what you need to know.

In today's issue:

AI is moving from lab to production… but user adoption is still the biggest challenge

Automating entry-level jobs today will create a leadership crisis in 5 years

Liberty Mutual is building AI fluency to combat the expectation gap between what business leaders think AI can do and what it takes to make it work

1. AI is moving from lab to production… but user adoption is still the biggest challenge

InsurTech Geek Podcast with James Benham, CEO @ JBK and Terra, and Rob Galbraith: InsurTech 2025: A Year in Review & Predictions for 2026 (Dec 26, 2025)

Background: James Benham runs a 300-person firm building technology for carriers, MGAs, TPAs, and brokers. Rob Galbraith is the "most interesting man in insurance." Together they've watched InsurTech mature from "healthy teenage puberty" to legitimate enterprise transformation. Their 2025 verdict: AI finally moved from pilots to production. They're doing med record summarization on millions of records. Claim-level summaries. Predictive modeling. And they're building omnimodel agents for 2026. But here's the uncomfortable truth: the hardest part of every implementation is still getting users to actually use it.

TLDR:

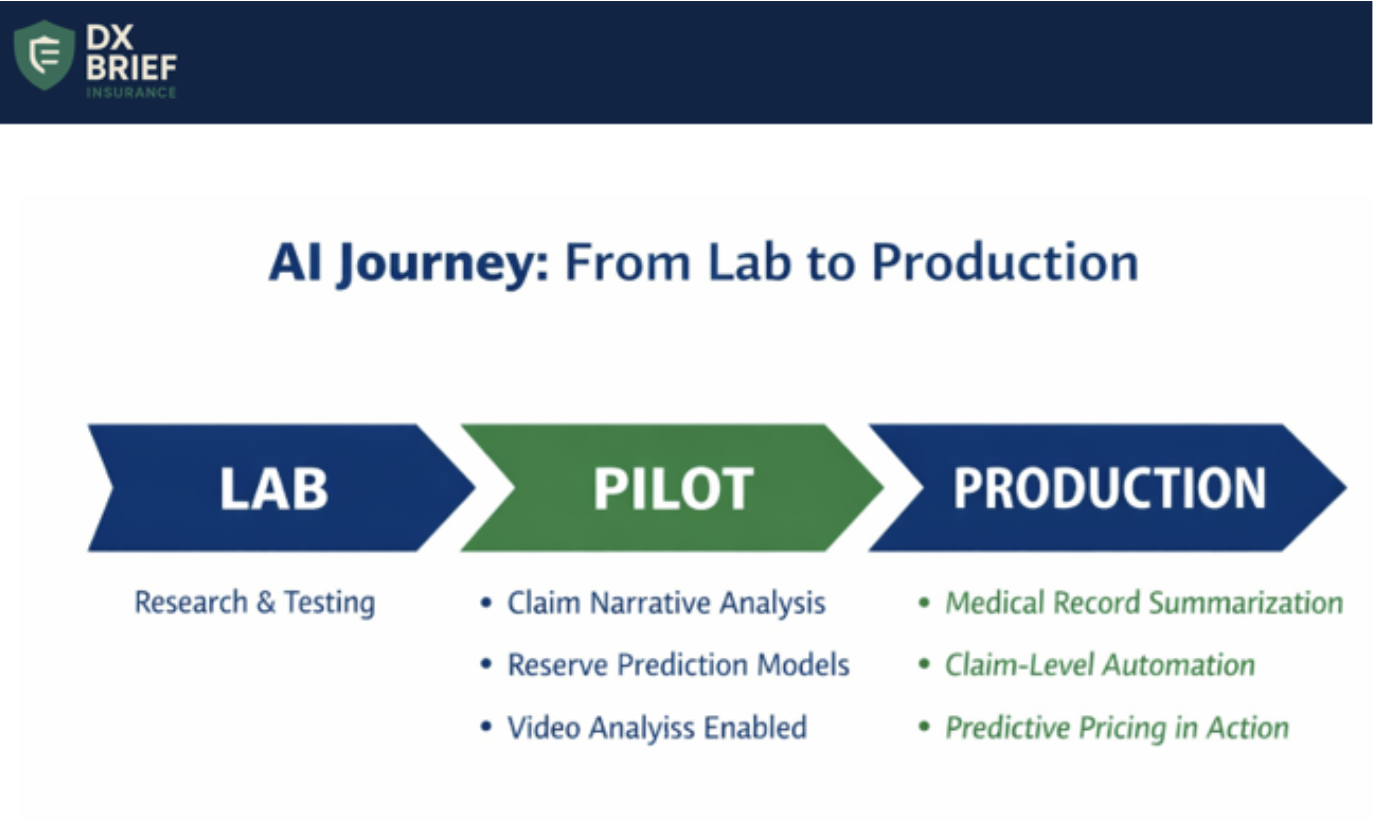

2025 was the year AI moved from pilots to production: carriers are now running med record summarization on millions of records, deploying predictive reserve models, and using machine learning for pricing recommendations in live environments.

Low-code platforms may be dying. With tools like Cursor and Claude Code, it's easier to build custom solutions than to pay $4M/year in licensing for middleware that still requires engineers anyway.

User adoption is still the hardest part: the last 5% of the project takes about 60% of the effort.

AI moved from labs to production, and the gap between talkers and doers is widening. Benham doesn't mince words: "A year ago, we were still talking about lab projects. Labs, labs, labs, pilots, pilots, pilots. Now it's full-tilt implementation." His team is running med record summarization on millions of records, claim-level summaries, policyholder summaries, and predictive modeling for total incurred prediction.

The shift is seismic. "This year, for me, was a major year where I finally stopped saying 'we can't do that.' Before, clients would ask: Can we easily analyze a video? Yes, actually, now we can. Can we read all the claim narrative from recorded statements, call out keywords, summarize it, populate static fields? Yeah, we can do that now."

The implications for insurance executives: if your AI initiatives are still in "pilot" or "lab" status, you're falling behind. The leaders have moved to production. The laggards are still doing TED talks.

Cloud-native and AI-native are now table stakes, and low-code may be dead. Five years ago, large insurance companies said they didn't trust the cloud. Now they're in "full-tilt cloud deployment." Why does this matter? "If you really want to leverage AI frameworks, you actually need to have your unstructured and structured data in the cloud. You don't have to transmit it, the OpenAI APIs in Azure can hit those buckets directly."

Benham thinks low-code platforms are dying. "It's so much easier to use Cursor, Claude Code, and other tools to build custom solutions. You don't need a low-code platform sitting in the middle. These are really expensive. A big carrier pays $4 million a year just in licensing. And it's middleware. I think if I were a low-code company, I'd be really nervous."

The new requirement: "Cloud-native" means architected for the cloud, not lifted-and-shifted. "AI-native" means text summarization and machine learning models built in, not add-on modules you buy separately.

User adoption: the last 5% of the project takes 60% of the effort. This is the insight that humbles every transformation leader: "The hardest part of all these implementation projects – whether it's two-way texting or record summarization or claim summarization or reserve prediction – is getting people to actually use it. It's the last 5% of the project and it's like 60% of the effort."

Benham's solution is obsessive UX optimization: "If you have reserve recommendations, they have to be front and center on the screen where the user is setting reserves. If you push it off-screen, users will not click on it. It's got to be literally 10 pixels above where they're making the decision."

His team uses Microsoft Clarity to track user behavior, including "rage clicks" (frustrated repeated clicking) and "dead clicks" (clicking on things users think are links but aren't). They take that data, have QA review it, and code corrections. "The end of all these iterations is a radically better user experience."
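Clarity flags rage and dead clicks automatically, and its exact detection rules aren't described here. To make the idea concrete, here is a minimal Python sketch of one plausible rage-click heuristic. The `Click` record, the `find_rage_clicks` function, and the thresholds (3 or more clicks within 1 second, all within 30 pixels of the first) are hypothetical choices for illustration, not Clarity's definition or API.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Click:
    t_ms: int   # timestamp in milliseconds
    x: float    # page x-coordinate in pixels
    y: float    # page y-coordinate in pixels


def find_rage_clicks(clicks, min_clicks=3, window_ms=1000, radius_px=30.0):
    """Group clicks into bursts that look like rage clicks.

    Hypothetical heuristic (not Microsoft Clarity's actual rules): a burst is
    at least `min_clicks` clicks within `window_ms` of the first click and
    within `radius_px` pixels of it. Each click belongs to at most one burst.
    """
    clicks = sorted(clicks, key=lambda c: c.t_ms)
    used = set()
    bursts = []
    for i, anchor in enumerate(clicks):
        if i in used:
            continue
        members = [i]
        for j in range(i + 1, len(clicks)):
            if clicks[j].t_ms - anchor.t_ms > window_ms:
                break  # later clicks are outside the time window
            close = hypot(clicks[j].x - anchor.x, clicks[j].y - anchor.y) <= radius_px
            if j not in used and close:
                members.append(j)
        if len(members) >= min_clicks:
            used.update(members)
            bursts.append([clicks[k] for k in members])
    return bursts


# Three quick clicks on the same spot, then one unrelated click later.
session = [Click(0, 100, 100), Click(200, 102, 101), Click(400, 99, 100), Click(5000, 500, 500)]
bursts = find_rage_clicks(session)
print(len(bursts), len(bursts[0]))  # 1 3
```

In practice the detection is the easy part; the value Benham describes comes from the weekly review loop where someone looks at the flagged sessions and ships a fix.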

2026 prediction: The year of omnimodel agents. Looking ahead, Benham is building "omnimodel agents" – AI systems that combine many different types of artificial intelligence in a single workflow. "You can have it go on the web and do all the things an old RPA bot could do – log into a website, download a sheet – but then perform an analysis, do a summary, create a graphic, analyze photos, analyze videos, analyze free text, prepare a recommendation, predict a model outcome, summarize it into a report, and upload it to a website."

This is available now. And it's getting better: "Super agents are coming in 2026. You saw ChatGPT and others roll out agent mode this year. You're going to see far more capabilities in the next 12 months."

What to do about this:

→ Audit your AI initiatives: pilot or production? List every AI project in your organization. Mark each as "lab/pilot" or "production." If more than 30% are still in pilot after 12 months, you have an execution problem, not an innovation problem.

→ Implement user behavior tracking on every new deployment. Deploy tools like Microsoft Clarity to track rage clicks, dead clicks, and user flows. Assign someone to review sessions weekly and code UX improvements.

→ Start building your agentic AI roadmap. Identify 3-5 workflows where an omnimodel agent could handle end-to-end processing: web research + document analysis + summary generation + system updates. These are your 2026 priorities.
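The pilot-versus-production audit reduces to simple arithmetic. Here is a minimal Python sketch, assuming a hypothetical project list where each entry records its status and how many months it has been running, and reading the rule as "more than 30% of projects at least 12 months old are still in lab/pilot." The `AIProject` record and both function names are illustrative, not from the source.

```python
from dataclasses import dataclass


@dataclass
class AIProject:
    name: str
    status: str         # "lab/pilot" or "production"
    months_active: int  # months since the project started


def stalled_pilot_share(projects, min_age_months=12):
    """Share of projects at least `min_age_months` old that are still in lab/pilot."""
    mature = [p for p in projects if p.months_active >= min_age_months]
    if not mature:
        return 0.0  # nothing old enough to judge yet
    stalled = [p for p in mature if p.status == "lab/pilot"]
    return len(stalled) / len(mature)


def has_execution_problem(projects, threshold=0.30, min_age_months=12):
    """True when the stalled-pilot share exceeds the threshold (30% by default)."""
    return stalled_pilot_share(projects, min_age_months) > threshold


# Hypothetical portfolio for illustration only.
portfolio = [
    AIProject("Med record summarization", "production", 18),
    AIProject("Claim summaries", "production", 14),
    AIProject("Reserve prediction", "lab/pilot", 20),
    AIProject("Two-way texting", "lab/pilot", 13),
    AIProject("Video analysis", "lab/pilot", 4),  # too new to count
]
print(stalled_pilot_share(portfolio))    # 0.5
print(has_execution_problem(portfolio))  # True
```

Excluding projects younger than 12 months keeps new experiments from being counted against you; only pilots that have had a full year to graduate count as stalled.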

2. Automating entry-level jobs today will create a leadership crisis in 5 years

Streamlining Insurance with Tony Chimera, CAO @ Westfield Specialty, Brett Carter, VP @ The Jacobson Group, and Steve Patscot, Partner @ Spencer Stewart: AI, Automation, and Digital Transformation (Dec 9, 2025)

Background: Tony Chimera of Westfield Specialty, Brett Carter of Jacobson Group, and Steve Patscot of Spencer Stewart represent decades of insurance HR leadership, and they're delivering a warning to transformation leaders: just because you can automate entry-level jobs doesn't mean you should. The short-term efficiency gains feel good for 24 months and become a talent crisis for the next 48.

TLDR:

At the heart of insurance is a relationship. Underwriters, claims professionals, and actuaries should use AI to spend more time on relationships and less on non-value-added work.

The talent pipeline problem is a math problem with a time delay. If you don't hire entry-level employees for two years, you won't have people with five years of experience when you need them in five years.

Development investment isn't an expense to cut in soft markets. It's preparation for the future. Companies that view training as discretionary spending will find themselves harvesting talent from competitors who invested.

Technical literacy plus soft skills. It’s not an “either/or”. Every insurance leader on the panel emphasized that they're looking for both. Technical literacy to keep pace with evolving technology, but also EQ, growth mindset, and curiosity.

The roles haven't fundamentally changed. Underwriters still underwrite, claims professionals still handle claims. What's changed is the expectation that everyone has both the technical capability to use new tools and the human skills to build relationships that technology can't replace.

The entry-level automation trap. Patscot delivers the panel's sharpest warning: "Just because you can automate all these entry-level jobs doesn't mean you should. In five years when you look for somebody with five years of experience, they're not there because you didn't hire them five years ago."

This is a math problem with a time delay. Chimera's internship program shows where he draws the line on automation: it receives 3,000+ applicants for 20 positions, and while technology helps filter, humans make the final decisions. "I do not want my technology replacing human judgment," he says. "Companies need to pay attention to efficiency, but also ask: is the process efficient in the first place, or are we just building castles in swamps… taking a really bad process and teaching the terminator to deliver it for us?"
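The time delay in Patscot's warning is easy to see in a toy model. This Python sketch assumes a hypothetical hiring plan (`hires_by_year`) and a uniform annual retention rate; both, along with the `five_year_cohort` function, are illustrative assumptions rather than figures from the panel. For each year, it estimates how many people from the entry-level class hired five years earlier are still on staff.

```python
def five_year_cohort(hires_by_year, retention=0.85, experience=5):
    """Estimate, for each year, how many people have `experience` years of tenure.

    hires_by_year[y] is the number of entry-level hires made in year y.
    `retention` is a hypothetical annual retention rate applied to every class.
    Returns None for years without enough history.
    """
    result = []
    for year in range(len(hires_by_year)):
        start = year - experience
        if start < 0:
            result.append(None)
        else:
            # The class hired `experience` years ago, shrunk by annual attrition.
            result.append(hires_by_year[start] * retention ** experience)
    return result


# Hypothetical plan: 20 hires a year, with entry-level hiring paused in years 2 and 3.
hires = [20, 20, 0, 0, 20, 20, 20, 20, 20, 20]
print(five_year_cohort(hires, retention=1.0))
# [None, None, None, None, None, 20.0, 20.0, 0.0, 0.0, 20.0]
```

The two-year pause does not surface as a gap until years 7 and 8, long after the savings were booked. Setting `retention` below 1.0 shrinks every class proportionally.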

Development is investment, not expense. When markets soften, development budgets are often the first to be cut. Carter argues the opposite: "In a market that is largely soft right now, what a lot of companies do is view development as an expense. I would argue we need to be investing now to prepare ourselves for the future."

The retention math supports this. Patscot notes that employee referrals are consistently the best source of hires: good retention, effective cost, and built-in accountability. But referrals only work if employees want to stay and believe the company is investing in them. The moment that stops, recruiters start calling.

The 20% rule for personal development. Patscot offers a framework for insurance professionals navigating AI disruption: "Get 20% better every year, and if you land at 10%, you're probably well ahead of the pack." His broader point: "We're entering a world that’s changing faster than people are changing. As human beings, we can change faster… we just don't like it."

The fastest path to obsolescence is fear of technology. The fastest path to career resilience is continuous improvement, robust networks, and the flexibility to embrace change as a constant.

What to do about this:

→ Audit your entry-level hiring against your five-year talent needs. If you're automating roles today that feed your senior talent pipeline, model the gap and adjust. The 24-month savings isn't worth the 48-month crisis.

→ Protect development investment during soft markets. When budgets tighten, ring-fence training and early-career programs as strategic investments, not discretionary expenses. Present the ROI case in terms of future leadership pipeline.

→ Build AI implementation around advisor enhancement, not replacement. Every automation decision should pass the test: does this help our people spend more time on relationships and less time on non-value-added work? If it eliminates the relationship, question the decision.

3. Liberty Mutual is building AI fluency to combat the expectation gap between what business leaders think AI can do and what it takes to make it work

MIT CIO Symposium with Monica Caldas, EVP & Global CIO @ Liberty Mutual Insurance; Melissa Swift, CEO @ Anthrome; and Reshmi Ramachandran, VP Partnerships @ Cprime (Jan 3, 2026)

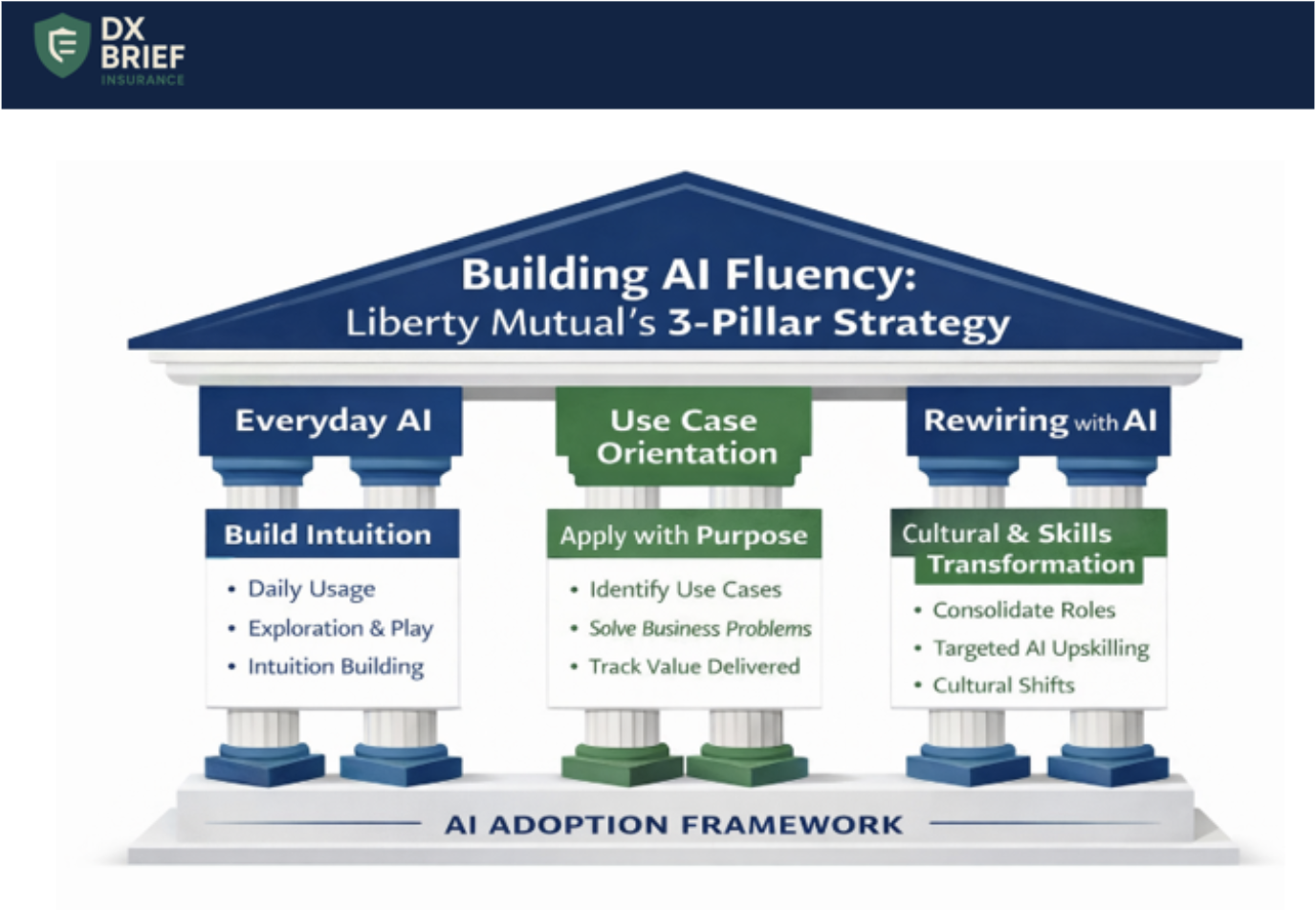

Background: Monica Caldas, CIO of Liberty Mutual, has developed a three-pillar framework for AI adoption that addresses the most common failure point: the expectation gap between what business leaders think AI can do and what it actually takes to make it work. Her approach has transformed how Liberty Mutual thinks about skills, moving from fragmented job descriptions to a unified framework of 6 job families and 30 roles.

TL;DR:

Liberty Mutual deploys AI through three pillars: Everyday AI (daily usage to build intuition), Use Case Orientation (value-driven deployment), and Rewiring with AI (deeper cultural transformation).

The biggest obstacle isn't technology. It's the expectation gap: leaders overestimate what AI can do out of the box while underestimating what it takes to make AI work well. The upside of getting it right is real: IKEA retrained 8,500 call center agents as design consultants after AI automation, generating $1.4B in additional revenue.

Build intuition before building use cases. Liberty Mutual launched "Liberty GPT" within two weeks of the technology becoming available through its Microsoft partnership in early 2023. But the first pillar isn't about deploying sophisticated solutions. It's about everyday AI usage.

Caldas explains: "You have to develop an intuition about what AI can and cannot do. There's not enough training that I'm going to provide that expresses it can hallucinate, it can do this, it can do that. You actually have to have an intuition, and when you do, you will start to have flight of imagination about what it can do for my team, for my role."

Most insurers jump straight to use case development. Liberty Mutual recognized that without widespread hands-on experience, leaders can't distinguish between realistic opportunities and vendor hype. The everyday AI pillar creates organizational antibodies against the expectation gap.

You have to simplify skills before you can develop them. Before tackling AI skills specifically, Caldas' team had to clean up their skills data. They had "lots of roles with lots of different names that kind of did the same thing and didn't do the same thing."

They reduced role variation, landing on 6 job families and 30 roles, each with detailed skill descriptions. Every employee now has a skills profile, enabling targeted training based on actual capability gaps.

When headlines trumpet "prompt engineering is the new hot job," Liberty Mutual breaks that role down into component skills within its existing skills library.

What to do about this:

→ Launch an "everyday AI" initiative before building use cases. Deploy enterprise-grade AI chat tools (with appropriate guardrails) and encourage exploration. Measure adoption rates across departments. The goal isn't productivity gains yet. It's building organizational intuition about AI capabilities and limitations.

→ Audit your skills architecture. Count how many unique job titles exist in your technology organization. If the number exceeds 30-40, you likely have redundancy that prevents meaningful skills development. Map skills to capabilities, then capabilities to strategic priorities.

→ Reframe AI discussions from "jobs" to "tasks." When employees ask "What's going to happen to my job?" – and they will – reorient to tasks. Some tasks become more efficient with AI; this creates capacity for higher-value work. The IKEA example illustrates this: customer service agents became design consultants, generating $1.4B in new revenue.
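The first step of the skills-architecture audit, counting unique titles, can be sketched in a few lines of Python. The title list, the normalization rule (lowercase, collapse whitespace), the `title_audit` function, and the default threshold of 40 (the upper end of the 30-40 range above) are assumptions for illustration.

```python
from collections import Counter


def normalize_title(title: str) -> str:
    """Lowercase and collapse whitespace so cosmetic variants count as one title."""
    return " ".join(title.lower().split())


def title_audit(titles, threshold=40):
    """Count unique normalized job titles and flag counts above the threshold."""
    counts = Counter(normalize_title(t) for t in titles)
    return {
        "unique_titles": len(counts),
        "over_threshold": len(counts) > threshold,
        "most_common": counts.most_common(3),
    }


# Hypothetical HR export of job titles.
titles = ["Software Engineer", "software  engineer", "Application Developer",
          "Data Engineer", "Cloud Engineer"]
audit = title_audit(titles)
print(audit["unique_titles"], audit["over_threshold"])  # 4 False
```

Normalization only catches cosmetic duplicates. Titles such as "Software Engineer" and "Application Developer" that may describe the same work still need a human mapping onto job families and roles, which is the harder part of what Liberty Mutual describes.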

Disclaimer

This newsletter is for informational purposes only and summarizes public sources and podcast discussions at a high level. It is not legal, financial, tax, security, or implementation advice, and it does not endorse any product, vendor, or approach. Insurance environments, laws, and technologies change quickly; details may be incomplete or out of date. Always validate requirements, security, data protection, regulatory compliance, and risk implications for your organization, and consult qualified advisors before making decisions or changes. All trademarks and brands are the property of their respective owners.