Why Most Insurance AI Chatbots Fail, and What Actually Works

Welcome to DX Brief - Insurance, where every week we interview practitioners and distill industry podcasts and conferences into what you need to know.

In today's issue:

Why your AI Chatbot investment is failing… and what Aventum's CTO learned from building the London Market's largest tech transformation

Oscar Health deployed 30+ LLMs while most insurers still debate their first AI use case

How Tata AIA's chatbot handles 15 million interactions at 98.6% accuracy

1. Why your AI Chatbot investment is failing… and what Aventum's CTO learned from building the London Market's largest tech transformation

Virtual Lunch Podcast with Hasani Jess, CTO at Aventum Group: Why Insurance Tech Has Failed (And What Comes Next) (Dec 11, 2025)

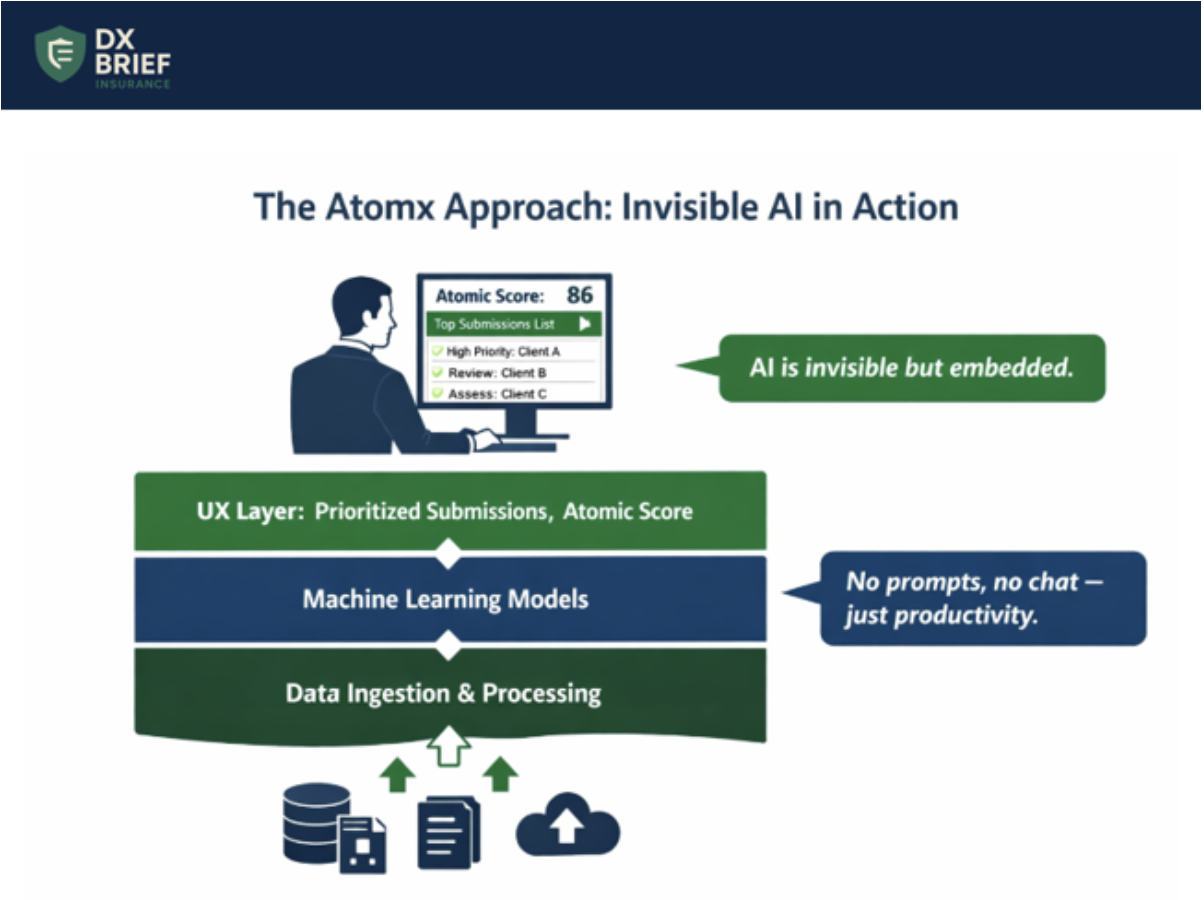

Background: Hasani Jess leads one of the largest technology transformations in the London insurance market – 140 tech staff, nearly 25% of Aventum's workforce, building Atomx from scratch. His verdict on why most insurance technology investments fail: they're point solutions that create "sticky tape people" whose job is keeping disconnected systems together. The real opportunity isn't better chatbots; it's embedding AI invisibly into end-to-end workflows so underwriters never think about AI at all. They just work faster.

TLDR:

Point solutions guarantee sticky tape. Aventum found "lots of fantastic solutions" in the market, but the handoffs between them created subprocess armies. Atomx was built to eliminate that integration tax entirely.

AI chatbots fail because work doesn't happen in chatbots. The real power comes from embedding AI into existing workflows so users don't notice it. When underwriters see a prioritized action list, they shouldn't care whether AI generated it.

Visual transformation beats documentation. Instead of spending months on Business Requirement Documents guaranteed to be wrong, Aventum uses wireframing to capture edge cases earlier: "Oh, but for this particular client, that process doesn't work, so we do this hack."

Point solutions create sticky tape armies. When Jess joined Aventum, they had "lots of fantastic solutions" already deployed. The problem? "They're all point solutions. There's lots of handoffs, lots of disconnectivity, lots of sticky tape that you need to put in between."

Insurance is complex enough – specialty and reinsurance complexity "explodes" the moment pen hits paper. Adding disconnected tools means adding people whose sole purpose is making systems talk to each other.

Atomx exists to eliminate that integration tax by supporting "the activity of an insurance company from start to finish" – delegated authority, binders, underwriting, finance, and broker lifecycle in one platform.

AI is only as powerful as the workflows you embed it in. Jess is direct about the AI hype cycle: "AI is an amazingly powerful tool, but it's only as powerful as the workflows that you can embed it in."

The fixation on chatbots is a dead end because "our work doesn't happen in chatbots." When you bolt a chatbot onto existing processes, you've just created more sticky tape.

The alternative: embed AI invisibly into workflows. When Atomx underwriters see a prioritized submission list, "they're not thinking, oh, this was AI that's done this for me—it's just, okay, I've got some work to do." The AI disappears. The productivity remains.

Simplify complexity through Apple-inspired design… but know the limits. Aventum uses design principles "inspired by Apple" to reduce cognitive load.

Their Atomic Score works like a credit score for submissions. Complex risk analysis distilled into a single number underwriters trust.

But Jess acknowledges reality: "There's a limit to how simple you can make something without making it non-functional anymore." The goal isn't dumbing down. It's handling complexity in the platform so users face only the decisions that require human judgment. Error rates become design feedback: "If you're seeing constant errors in your data, for us, that's a signal there's something wrong in the UX."

Visual transformation beats documentation theater. Most transformations spend months producing Business Requirement Documents that are "guaranteed to be wrong" because they miss the hacks, edge cases, and client-specific exceptions that only emerge in conversation.

Aventum's alternative: wireframing-led visual transformation. "We're going to show you the journeys you'll go through and use that as our documentation."

This approach surfaces the "oh, but except when this happens" moments earlier and creates buy-in simultaneously.

The team intentionally avoided hiring insurance experts for the tech function – bringing in people from music, healthcare, and telecoms who "were open to learn" rather than assuming they already knew the domain.

What to do about this:

→ Map your sticky tape headcount. Count the people whose primary job is moving data between systems, reconciling outputs, or managing handoffs. That number is your integration tax, and it is also the capacity you could free up by consolidating platforms.

→ Audit your AI investments against actual workflows. For each AI tool or chatbot, identify where in the user's day it sits. If accessing the AI requires leaving their primary workflow, you've created friction that will kill adoption. Redesign to embed AI outputs invisibly into existing processes.

→ Convert your next BRD into a wireframe sprint. Take a process currently documented in requirements and rebuild it as clickable wireframes. Test with actual users and count how many "oh, but except for this client" moments surface that the document missed.
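For the first action above, a rough tally can be as simple as the sketch below. It is only an illustration: the role titles, the activity labels, and the average cost figure are made-up assumptions, not Aventum data, and a real count would come from your own org chart and time studies.

```python
from dataclasses import dataclass

# Activity labels treated as "sticky tape" work in this sketch (assumed taxonomy).
STICKY_TAPE_ACTIVITIES = {"rekeying", "reconciliation", "handoff_management"}


@dataclass
class Role:
    title: str
    headcount: int
    primary_activity: str  # what the role spends most of its time on


def sticky_tape_headcount(roles: list[Role]) -> int:
    """Count people whose primary job is keeping disconnected systems together."""
    return sum(r.headcount for r in roles if r.primary_activity in STICKY_TAPE_ACTIVITIES)


def integration_tax(roles: list[Role], avg_annual_cost: float) -> float:
    """Rough annual cost of the sticky tape headcount (illustrative only)."""
    return sticky_tape_headcount(roles) * avg_annual_cost


# Hypothetical inventory, for illustration only.
roles = [
    Role("Bordereaux rekeying analyst", 4, "rekeying"),
    Role("Finance reconciliation clerk", 3, "reconciliation"),
    Role("Underwriter", 12, "underwriting"),
    Role("Broker handoff coordinator", 2, "handoff_management"),
]

print("Sticky tape headcount:", sticky_tape_headcount(roles))
print("Rough integration tax:", integration_tax(roles, avg_annual_cost=50_000.0))
```

The useful part is less the arithmetic than the classification step: deciding, role by role, whether the primary activity exists only because two systems don't talk to each other.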

2. Oscar Health deployed 30+ LLMs while most insurers still debate their first AI use case

Analyzing Healthcare Podcast: Mark Bertolini on Reinventing Health Insurance (Dec 8, 2025)

Background: Mark Bertolini – former Aetna CEO who led a $69B company – is now running Oscar Health, a $12B insurer with an NPS of 68 in an industry that averages zero. The difference? Oscar operates the first new insurance platform built since 1972: digital-native, cloud-based, single data model. This has allowed them to deploy over 30 LLMs in production, launch two Agentic AI systems for patient-physician collaboration, and cut 100 software engineers because AI now handles basic coding.

Your platform determines your AI ceiling. Most insurers debate which AI use case to pilot. Oscar debates which of their 30+ deployed LLMs needs optimization. The difference isn't strategy; it's infrastructure.

Oscar built "the first new platform in healthcare since 1972." Digital native. Cloud-based. One set of data. One version of truth. When Bertolini mentions that they reduced operating costs through back-end AI and launched patient-facing Agentic AI that calculates real-time out-of-pocket costs using member medical records and ChatGPT, he's describing capabilities that require a unified data architecture.

Most insurers run on fragmented systems that make even basic AI deployment a multi-year integration project.

AI that replaces conversations fails. AI that enhances them converts. Oscar's Agentic AI doesn't bypass clinicians; it arms them. Patients and physicians collaborate through the system to plan next steps in care, understand authorization requirements, automate requests, and see cost implications instantly.

But Bertolini is explicit about the limits: in one pilot, humans caught suicidal ideation that the AI missed. "Only a human would have recognized it because we know empathetically when we're talking to somebody."

The lesson: deploy AI on back-office processes aggressively, but move carefully on clinical interfaces where empathy matters.

Consumer choice changes market dynamics… if you let it. Oscar's individual ACA marketplace customers choose their own plans and networks, unlike employer-sponsored members who get three options from HR.

The result? Medical cost per member in ACA ($7,300) is lower than employer-sponsored insurance ($9,437), Medicare ($14,754), and Medicaid ($8,200).

When consumers invest their own dollars, they engage differently, which is why Oscar's diabetes product saw unexpected adoption from pregnant Black women seeking gestational diabetes coverage. Real choice creates behaviors value-based contracts never could.
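To make the size of the gap concrete, the per-member cost figures quoted above can be turned into percentage differences. This is plain arithmetic on the numbers as cited; the variable and function names are ours, and a raw comparison like this says nothing about differences in population mix between the segments.

```python
# Medical cost per member, as quoted in the summary above (USD).
costs = {
    "ACA marketplace": 7_300,
    "Employer-sponsored": 9_437,
    "Medicare": 14_754,
    "Medicaid": 8_200,
}

aca = costs["ACA marketplace"]


def pct_lower(baseline: float, other: float) -> float:
    """How much lower `baseline` is than `other`, as a percentage of `other`."""
    return round((other - baseline) / other * 100, 1)


# Percentage by which ACA cost per member sits below each other segment.
gaps = {
    name: pct_lower(aca, cost)
    for name, cost in costs.items()
    if name != "ACA marketplace"
}

for name, gap in gaps.items():
    print(f"ACA is {gap}% lower than {name}")
```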

What to do about this:

→ Audit your platform's AI readiness score. Before debating use cases, map how many systems touch a single member record. If the answer is more than three, your AI deployment timeline just extended by 18-24 months. Consider whether point solutions or platform consolidation serves your five-year strategy.

→ Separate back-office and member-facing AI deployment tracks. Oscar runs aggressive AI automation on coding and operations while maintaining human oversight on clinical interactions. Define which processes can move fast (claims processing, coding, fraud detection) versus which require human-in-the-loop design (member communications, clinical decision support).

→ Build consumer intelligence infrastructure. Oscar's product innovation (diabetes plans, menopause products) came from watching how consumers chose coverage when given real options. If you only see members through employer-defined segments, you're missing behavioral signals that drive product-market fit.
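The readiness check in the first action can be sketched as a simple inventory. Everything below is hypothetical: the field names and system names are placeholders, and the threshold of three is just the rule of thumb stated above, not an Oscar Health metric.

```python
# Hypothetical map of member-record fields to the systems that read or write them.
FIELD_TO_SYSTEMS = {
    "demographics": {"policy_admin", "crm"},
    "claims_history": {"claims_core", "data_warehouse"},
    "authorizations": {"um_platform", "claims_core"},
    "billing": {"billing_engine", "policy_admin"},
}

# Rule of thumb from the text: more than three systems is a warning sign.
MAX_SYSTEMS = 3


def systems_touching_member_record(field_map: dict[str, set[str]]) -> set[str]:
    """Return the distinct systems that touch any part of a single member record."""
    systems: set[str] = set()
    for touched_by in field_map.values():
        systems |= touched_by
    return systems


def is_fragmented(field_map: dict[str, set[str]], max_systems: int = MAX_SYSTEMS) -> bool:
    """True when the record is spread across more systems than the threshold allows."""
    return len(systems_touching_member_record(field_map)) > max_systems


systems = systems_touching_member_record(FIELD_TO_SYSTEMS)
print("Systems touching a member record:", sorted(systems))
print("Fragmented:", is_fragmented(FIELD_TO_SYSTEMS))
```

Counting distinct systems, not field-to-system links, matters here: a system that appears under several fields is still one integration to reason about.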

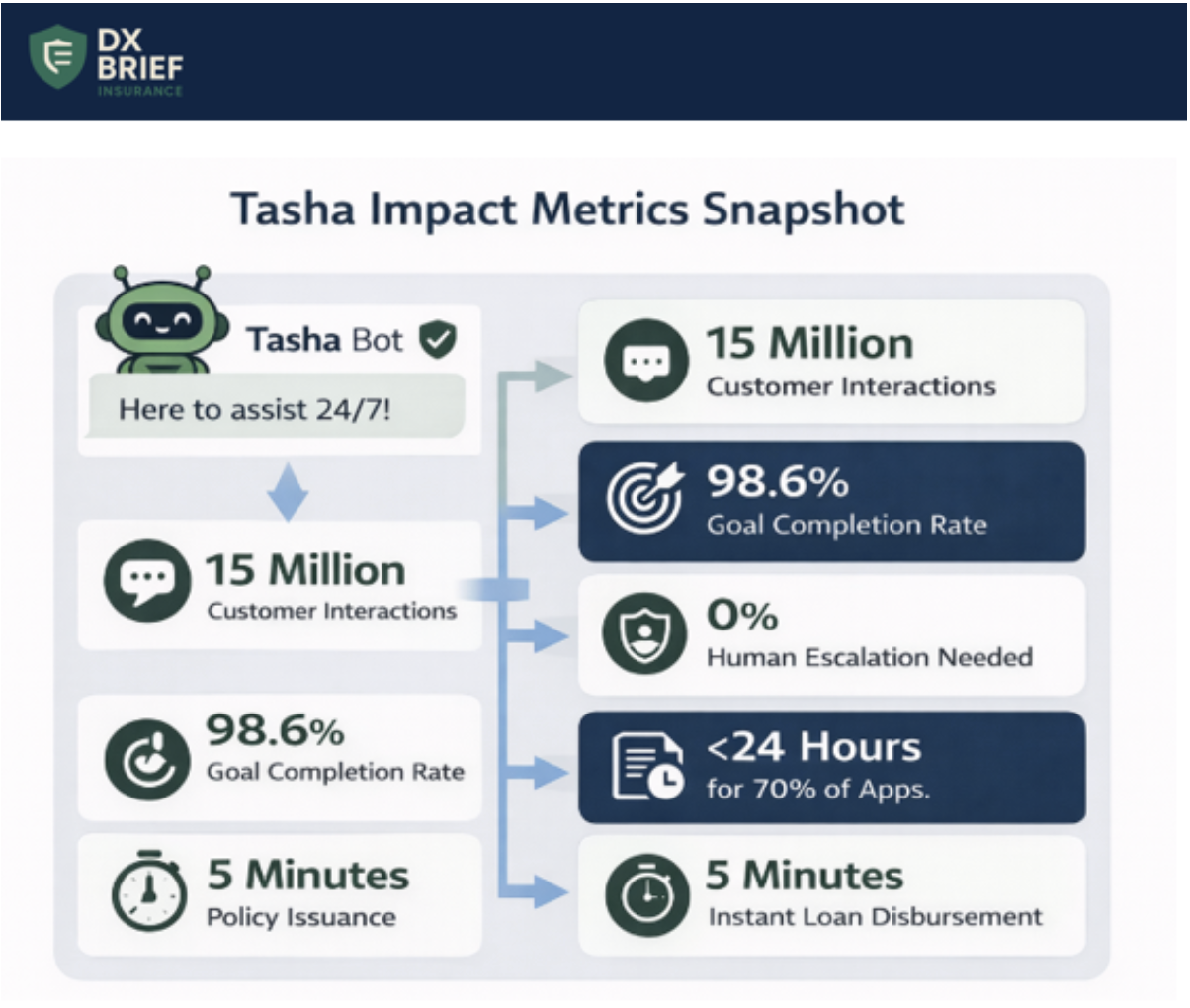

3. How Tata AIA's chatbot handles 15 million interactions at 98.6% accuracy

The Cover Story Podcast with Soumya Ghosh, CTO of Tata AIA Life Insurance: Future Ready Insurance (Dec 16, 2025)

Background: Tata AIA's chatbot Tasha has processed 15 million customer interactions with a 98.6% goal completion rate – with no human in the loop. CTO Soumya Ghosh says that's just the starting point.

TLDR:

Tata AIA's WhatsApp-enabled bot Tasha achieves 98.6% goal completion across 15 million interactions with no human escalation required, enabled by a "champion-challenger" monitoring architecture that validates every response in real time.

Controlled ramp-up is non-negotiable: start with 5% of customers, choose tech-savvy demographics, monitor obsessively, then expand, because GenAI testing alone will never match production complexity.

Policy issuance dropped from weeks to under 24 hours for 70% of applications, and instant loan disbursement replaced 5-7 day cycles, proving that real-time service expectations can be met in life insurance.

Monitoring matters more than deployment. With GenAI, no amount of testing prepares you for production. Tata AIA built a "champion-challenger" architecture where a background process continuously challenges every chatbot response, checking compliance, accuracy, and tone before the customer sees it. When the challenger identifies problems, it suggests corrections. This architecture acknowledges a truth most teams avoid: GenAI will hallucinate, so you build monitoring systems that catch hallucinations in real time rather than hoping testing eliminates them.
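The shape of that pattern can be sketched in a few lines. This is not Tata AIA's implementation, which the podcast does not detail: here the challenger is a set of toy rule checks where a production system would more likely use a second model, tone checking is left out, and the banned phrases, fact table, and fallback message are all invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical compliance rule: phrases the bot must never send to a customer.
BANNED_PHRASES = ("guaranteed returns", "risk-free")
FALLBACK = "I want to make sure I get this right. Let me check and come back to you."


@dataclass
class Verdict:
    approved: bool
    issues: list[str] = field(default_factory=list)
    suggestion: Optional[str] = None  # correction proposed by the challenger


def challenger(response: str, known_facts: dict[str, str]) -> Verdict:
    """Challenge a draft response on compliance and accuracy before delivery."""
    issues = []
    lowered = response.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            issues.append(f"compliance: contains '{phrase}'")
    # Toy accuracy check: if a known topic is mentioned, its known value must appear.
    for topic, value in known_facts.items():
        if topic in lowered and value.lower() not in lowered:
            issues.append(f"accuracy: '{topic}' mentioned without '{value}'")
    if issues:
        return Verdict(False, issues, FALLBACK)
    return Verdict(True)


def respond(question: str, champion: Callable[[str], str], known_facts: dict[str, str]) -> str:
    """Return the champion's draft only if the challenger approves it."""
    draft = champion(question)
    verdict = challenger(draft, known_facts)
    return draft if verdict.approved else (verdict.suggestion or FALLBACK)


# Made-up fact table and stand-in "champion" bots for illustration.
facts = {"grace period": "30 days"}
good_bot = lambda q: "Your grace period is 30 days."
bad_bot = lambda q: "This plan offers guaranteed returns."

print(respond("How long is my grace period?", good_bot, facts))
print(respond("Is this plan safe?", bad_bot, facts))
```

The design point is the ordering: the challenger sits between the model and the customer, so a failed check changes what gets delivered and is not merely logged afterwards.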

Four chatbot generations in two years. The evolution path: menu-driven → NLP-based → first-generation GenAI → second-generation GenAI. Each generation taught lessons that shaped the next.

The key insight? Don't plan for a perfect chatbot launch. Plan for continuous evolution as the underlying technology transforms beneath you.

Ghosh notes the platform itself changes rapidly, so solutions that worked six months ago become obsolete.

Controlled ramp-up as discipline. Tata AIA's approach: deploy to 5% of customers, specifically targeting tech-savvy demographics first. Monitor continuously. Fine-tune. Then expand to the next cohort. Never remove the guardrails.

This controlled methodology allowed them to achieve 98.6% completion rates because they refined the experience incrementally rather than launching at scale and firefighting.
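One common way to implement a staged rollout like this is stable hash bucketing, sketched below. The ramp schedule after 5%, the `tech_savvy` flag, and the customer ID format are assumptions for illustration; the podcast only describes starting at 5% with tech-savvy customers and expanding cohort by cohort.

```python
import hashlib

# Hypothetical ramp schedule: share of customers exposed at each stage.
RAMP_STAGES = [0.05, 0.20, 0.50, 1.00]


def bucket(customer_id: str) -> float:
    """Map a customer ID to a stable value in [0, 1) using a hash."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x1_0000_0000


def in_rollout(customer_id: str, stage: int, tech_savvy: bool) -> bool:
    """Decide whether a customer sees the new bot at the given ramp stage."""
    # Assumption: the first stage is restricted to customers flagged as tech-savvy.
    if stage == 0 and not tech_savvy:
        return False
    return bucket(customer_id) < RAMP_STAGES[stage]


# Illustrative check of how many made-up tech-savvy customers land in stage 0.
ids = [f"cust-{n}" for n in range(2000)]
stage0 = [c for c in ids if in_rollout(c, 0, tech_savvy=True)]
print(f"Stage 0 exposure: {len(stage0) / len(ids):.1%}")
```

Because the bucket depends only on the customer ID, anyone included at an early stage stays included as the share grows, so nobody flips between the old and new experience during the ramp.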

Real-time service kills the ticket queue. Most insurance apps still operate on 48-hour resolution windows: submit a request, get a ticket number, check back in two days.

Ghosh's team eliminated this entirely. Address changes, loan requests, policy queries – all instant. Their loan disbursement feature deposits money in customer accounts by the time they exit the portal.

This wasn't just about technology; it was about recognizing that modern customers have zero tolerance for ticket-based workflows.

Speed requires two-week sprint discipline. Ghosh runs transformation on two-week sprints with clear metrics defined upfront.

At each checkpoint, teams either hit targets, miss them, or discover they're headed in the wrong direction.

The discipline: never let a misguided initiative run beyond two weeks before correction.

As Ghosh puts it: fail fast, fix fast, and never run projects that stretch for years because the world changes faster than multi-year roadmaps.

What to do about this:

→ Build champion-challenger monitoring from day one. Don't deploy GenAI hoping testing covered edge cases. Architect a validation layer that checks every response against compliance rules and accuracy benchmarks before customer delivery.

→ Launch with 5%, not 100%. Resist pressure to scale immediately. Design your rollout in demographic cohorts, starting with customers most likely to tolerate imperfection. Use production data – not test scenarios – to refine before expanding.

→ Audit your ticket-based workflows. Identify every customer interaction that currently generates a ticket with a multi-day resolution window. Prioritize converting three of these to real-time resolution within the next quarter.
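The ticket audit in the last action lends itself to a quick shortlist. The sketch below assumes you can export interaction types with their volumes and typical resolution times; the sample rows and the 48-hour cutoff are illustrative assumptions, not Tata AIA figures.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    name: str
    monthly_volume: int
    resolution_hours: float  # typical time from request to resolution


def realtime_candidates(interactions, min_hours=48.0, top_n=3):
    """Pick the highest-volume interactions that still take multiple days to resolve."""
    slow = [i for i in interactions if i.resolution_hours >= min_hours]
    return sorted(slow, key=lambda i: i.monthly_volume, reverse=True)[:top_n]


# Made-up export, for illustration only.
interactions = [
    Interaction("Address change", 4000, 48),
    Interaction("Loan request", 1500, 120),
    Interaction("Policy query", 9000, 0.1),
    Interaction("Nominee update", 800, 72),
    Interaction("Premium receipt", 2500, 48),
]

shortlist = realtime_candidates(interactions)
for item in shortlist:
    print(f"{item.name}: {item.monthly_volume}/month, {item.resolution_hours}h to resolve")
```

Ranking by volume is only one possible prioritization; weighting by complaint rate or customer value would be equally defensible.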

Disclaimer

This newsletter is for informational purposes only and summarizes public sources and podcast discussions at a high level. It is not legal, financial, tax, security, or implementation advice, and it does not endorse any product, vendor, or approach. Insurance environments, laws, and technologies change quickly; details may be incomplete or out of date. Always validate requirements, security, data protection, regulatory compliance, and risk implications for your organization, and consult qualified advisors before making decisions or changes. All trademarks and brands are the property of their respective owners.